Snowflake Data Cloud: Path to Redefining Data Modernization


Dr. Hassan Sherwani

Data Analytics Practice Lead

March 5, 2024

Data is now woven into every organizational process, from predictive modeling to AI automation. Because vast volumes of data are used across all functions, enterprises need robust data warehousing solutions that do more than store data: the data must also be quickly accessible and usable across many business use cases.

Legacy data warehouses struggle to keep up with modern data demands, such as extracting data from multiple formats. High maintenance costs, rigid architecture, high failure rates, and data quality concerns are among the major drawbacks of traditional on-prem data warehouses.

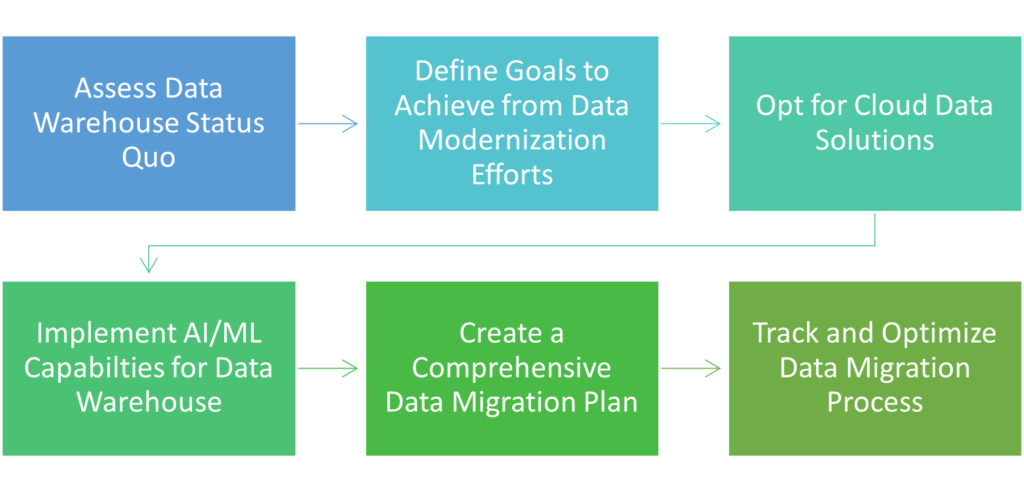

Here are some of the stages organizations need to execute to achieve successful data modernization:

For these reasons, most enterprises now prefer to migrate to cloud data warehouses. In this blog, we explain why we consider Snowflake the ideal choice for data warehouse modernization.

Why Choose Snowflake for Data Warehouse Modernization?

What is Snowflake Data Warehouse?

Snowflake is a fully managed, cloud-native data warehouse built on a highly scalable platform that separates storage from compute. It supports standard SQL and integrates with existing BI and ETL tools. With features such as automatic scaling, data sharing, and native support for semi-structured data, Snowflake aims to help companies move on from traditional enterprise data warehouses (EDW) and bring structured EDW data and semi-structured data lakes, traditionally kept in separate silos, into a single cloud data platform.

How is Data Stored on Snowflake?

What Makes Snowflake Data Cloud the Right Choice for Data Warehousing?

- Security and Compliance: Snowflake's security features, including encryption, access controls, and monitoring, safeguard data at every level. Multi-factor authentication adds an extra layer of protection, while single sign-on (SSO) and federated authentication keep access convenient for users.

- Governance: Snowflake's ACID-compliant transactions keep data consistent and accurate even when unexpected events interrupt processing, and its micro-partitioning system improves performance by organizing table data into contiguous, compressed storage units. A comprehensive metadata service tracks data origins, interactions, and relationships, which supports efficient query compilation and data management.

- Multi-Cluster, Shared Data Architecture: Snowflake's multi-cluster, shared data architecture simplifies overall system design, reduces costs, and ensures a single version of truth. By combining the capabilities of a data lake, an EDW, and data marts in a single SQL-based solution with virtually unlimited storage and compute, Snowflake lets teams access and consume data faster.

- Scalability and Performance: Each virtual warehouse can be resized on demand, and queries running on separate warehouses do not compete for compute. Snowflake is an MPP system: scaling up (resizing a warehouse to a larger size with more compute) speeds up individual queries, while scaling out (adding clusters to a multi-cluster warehouse) handles more concurrent users and queries. Multi-cluster warehouses can also run in auto-scale mode, starting and stopping clusters as demand changes.

- Workload Isolation and Cost Management: Separating compute from storage provides strong workload isolation and cost control, and each independent virtual warehouse can be sized and scaled to match its workload. Cost optimization features such as auto-suspend and pass-through storage pricing further reduce expenses.

- Data Ingestion: Because compute clusters are independent, Snowflake supports near real-time data loading and reporting without resource contention. Its serverless ingestion service, Snowpipe, continuously loads new files from your cloud storage into Snowflake tables.

- Snowflake Data Marketplace: Snowflake’s Data Marketplace provides a secure alternative to traditional data-sharing methods, eliminating the need for cumbersome and risky physical data copies. Authorized users can securely access live, read-only data subsets, enabling seamless sharing and integration for deeper insights and data-driven decision-making without data movement or replication.

- Multi-Cloud Capabilities: Snowflake runs on AWS, Microsoft Azure, and Google Cloud, and its cross-cloud capabilities let organizations securely share data across regions and cloud accounts. This supports unified data management and gives teams a single source of truth for decision-making and business continuity.
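To make the scale-up, scale-out, and auto-suspend ideas above more concrete, here is a minimal Python sketch that builds `CREATE WAREHOUSE` statements for two isolated workloads. `WAREHOUSE_SIZE`, `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, `SCALING_POLICY`, `AUTO_SUSPEND`, and `AUTO_RESUME` are Snowflake warehouse properties; the helper `warehouse_ddl`, the warehouse names `load_wh` and `bi_wh`, and the sizes and timeouts are hypothetical examples, not recommendations. You would run the generated SQL in a worksheet or through SnowSQL.

```python
import re

# Unquoted Snowflake identifiers: letter or underscore first, then letters, digits, _ or $.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
# A subset of the documented WAREHOUSE_SIZE values, kept short for the sketch.
_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"}


def warehouse_ddl(name: str, size: str = "XSMALL", min_clusters: int = 1,
                  max_clusters: int = 1, auto_suspend_s: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement for one isolated workload (hypothetical helper)."""
    size = size.upper()
    if not _IDENT.match(name):
        raise ValueError(f"not a simple identifier: {name!r}")
    if size not in _SIZES:
        raise ValueError(f"unsupported size: {size}")
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("expected 1 <= min_clusters <= max_clusters")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} WITH "
        f"WAREHOUSE_SIZE = '{size}' "           # scale up: larger size, more compute per cluster
        f"MIN_CLUSTER_COUNT = {min_clusters} "   # scale out: cluster range for concurrency
        f"MAX_CLUSTER_COUNT = {max_clusters} "   # values above 1 need Enterprise Edition or higher
        "SCALING_POLICY = 'STANDARD' "           # favor starting clusters over queuing queries
        f"AUTO_SUSPEND = {auto_suspend_s} "      # suspend after this many idle seconds
        "AUTO_RESUME = TRUE;"                    # resume automatically on the next query
    )


# Separate warehouses keep loading jobs from slowing down BI dashboards.
for ddl in (warehouse_ddl("load_wh", "small", auto_suspend_s=60),
            warehouse_ddl("bi_wh", "medium", 1, 3, 300)):
    print(ddl)
```

Keeping loading and BI on separate warehouses means a heavy batch load never queues behind, or slows down, dashboard queries, and each warehouse stops consuming compute credits once it auto-suspends.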

A Quick Guide to Data Migration from Legacy Data Warehouses

When migrating, companies must balance business priorities, goals, architecture needs, and cloud strategy. For large enterprises that have operated for years, data modernization is especially complicated because their data has already been through multiple cycles of updates and redesigns. They must also retain historical data to meet regulatory requirements.

Although the lift-and-shift approach is often promoted as the best way to overcome these challenges, organizations should first assess their historical data environment so they can choose the right migration approach. Keep the following best practices in mind when migrating data from legacy platforms to Snowflake:

- Data Extraction: To extract data efficiently from the source system, use read-only instances and native extractors, and stage the extracted data carefully to avoid corruption. This accelerates throughput and protects data integrity.

- Transfer Data to the Cloud: Identify optimal transfer windows, make the most of available bandwidth by compressing files, and consider device-based transfer when volumes are large and timelines are tight.

- Upload to Snowflake Data Cloud: Use Snowflake's native data loading utilities, run loads on a separate, cost-effectively sized virtual warehouse, and choose appropriate file sizes and partitioning for optimal throughput and organization.

- Data Validation: When validating migrated data, perform checksum-based and referential integrity checks and ensure the proper functioning of analytics and reporting tools in the new environment to maintain high-quality, validated data essential for reporting and AI/ML workloads.
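A checksum-based comparison can be sketched in Python without any Snowflake-specific API. Assuming you can fetch the same table's rows from both the source system and Snowflake (for example, through each database's Python driver), the idea is to hash every row and add the hashes modulo 2^64, which yields a fingerprint that does not depend on row order. The function names, the `\N` NULL marker, and the separator are hypothetical choices for this sketch. Values must be rendered identically on both sides (numeric scale, timestamp format, time zone), so in practice you would normalize types before hashing.

```python
import hashlib
from typing import Iterable, Sequence, Tuple

NULL_MARKER = "\\N"   # hypothetical choice: how NULL is rendered before hashing
SEP = "\x1f"          # ASCII unit separator, unlikely to appear in real values


def row_digest(row: Sequence[object]) -> int:
    """Hash one row's normalized text form to a 64-bit integer."""
    text = SEP.join(NULL_MARKER if v is None else str(v) for v in row)
    return int.from_bytes(hashlib.sha256(text.encode("utf-8")).digest()[:8], "big")


def table_fingerprint(rows: Iterable[Sequence[object]]) -> Tuple[int, int]:
    """Return (row count, order-independent checksum) for a table's rows."""
    count, checksum = 0, 0
    for row in rows:
        count += 1
        checksum = (checksum + row_digest(row)) % (1 << 64)  # addition ignores row order
    return count, checksum


source_rows = [(1, "alice", 10.5), (2, "bob", None)]
snowflake_rows = [(2, "bob", None), (1, "alice", 10.5)]  # same data, different order
print(table_fingerprint(source_rows) == table_fingerprint(snowflake_rows))  # True
```

Because the checksum is a sum rather than a set, duplicated or missing rows change it, and comparing the row count alongside it catches the most common load failures cheaply. Referential integrity checks still need separate queries against the migrated tables.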

From On-Prem to Snowflake: What You Need to Know

When it comes to data migration from on-prem to the Snowflake Cloud Data platform, it is essential to consider the amount and frequency of the data that needs to be transferred, the available tools and resources, and the desired performance and reliability.
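The amount of data largely decides whether a network transfer fits the migration window. As a back-of-the-envelope sketch, the hypothetical helper below estimates transfer time from data volume (decimal terabytes), link bandwidth, an assumed 80% link utilization, and an optional compression ratio. All defaults are assumptions to be replaced with your own measurements.

```python
def transfer_hours(data_tb: float, bandwidth_gbps: float,
                   utilization: float = 0.8, compression_ratio: float = 1.0) -> float:
    """Rough hours to push data_tb terabytes over a bandwidth_gbps link (hypothetical helper)."""
    if bandwidth_gbps <= 0 or not (0 < utilization <= 1) or compression_ratio < 1:
        raise ValueError("check bandwidth_gbps, utilization, and compression_ratio")
    bits = data_tb * 1e12 * 8 / compression_ratio        # bytes -> bits, reduced by compression
    effective_bps = bandwidth_gbps * 1e9 * utilization   # usable share of the link
    return bits / effective_bps / 3600                   # seconds -> hours


for ratio in (1.0, 3.0):
    print(f"10 TB over 1 Gbps at 80% use, {ratio:.0f}x compression: "
          f"{transfer_hours(10, 1, compression_ratio=ratio):.1f} h")
```

For 10 TB over a 1 Gbps link, this prints about 27.8 hours uncompressed and 9.3 hours at an assumed 3x compression ratio. If estimates like these exceed the available transfer window, device-based transfer (such as AWS Snowball) may be the faster route.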

To migrate data from external on-premises sources to Snowflake, companies have several options available:

Snowsight:

Companies can load data through Snowsight, Snowflake's web interface, or through the Classic Console. Snowsight Data Loading can quickly load data from a local machine or an existing stage into an existing table, or create a new table based on the file schema that Snowsight detects from file metadata. It supports files up to 250 MB in size.
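Before choosing Snowsight, it can help to check which files fit under the 250 MB limit mentioned above. The sketch below is a hypothetical pre-check, not a Snowflake tool: it splits files into those that can go through Snowsight and those that need Snowpipe, SnowSQL, or another method. Because the text does not say whether 250 MB means decimal or binary megabytes, the sketch assumes the smaller, decimal reading.

```python
import os
from typing import Dict, Iterable, List, Tuple

# Assumption: 250 MB read as 250,000,000 bytes (the more conservative interpretation).
SNOWSIGHT_MAX_BYTES = 250 * 1000 * 1000


def split_by_snowsight_limit(sizes: Dict[str, int],
                             limit: int = SNOWSIGHT_MAX_BYTES) -> Tuple[List[str], List[str]]:
    """Partition files into (fits Snowsight, needs another loading method)."""
    via_ui: List[str] = []
    via_other: List[str] = []
    for name, size in sorted(sizes.items()):
        (via_ui if size <= limit else via_other).append(name)
    return via_ui, via_other


def sizes_on_disk(paths: Iterable[str]) -> Dict[str, int]:
    """Read local file sizes in bytes, e.g. for files exported from an on-prem database."""
    return {p: os.path.getsize(p) for p in paths}


ui, other = split_by_snowsight_limit({"customers.csv": 40_000_000, "events.parquet": 900_000_000})
print("Snowsight:", ui, "| Snowpipe/SnowSQL:", other)
```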

Snowpipe:

Snowpipe loads data from staged files shortly after they become available, using a named Snowflake object called a pipe. New files can be detected through cloud messaging (event notifications from cloud storage) or through calls to Snowpipe's REST API. Snowpipe integrates with the major cloud storage services and runs on Snowflake-provided compute resources.
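A pipe is essentially a named wrapper around a `COPY INTO` statement. The Python sketch below only builds the `CREATE PIPE` SQL as a string; the helper `pipe_ddl` and the object names are hypothetical, and the target table and stage must already exist. With `AUTO_INGEST = TRUE`, loads are triggered by event notifications from an external stage's cloud storage, which must be configured separately; with `AUTO_INGEST = FALSE`, your application submits new files through Snowpipe's REST API.

```python
import re

# Optionally qualified unquoted identifier, e.g. orders or raw.public.orders.
_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_TYPES = {"CSV", "JSON", "AVRO", "ORC", "PARQUET", "XML"}


def pipe_ddl(pipe: str, table: str, stage: str,
             file_type: str = "CSV", auto_ingest: bool = True) -> str:
    """Build a CREATE PIPE statement wrapping a COPY INTO (hypothetical helper)."""
    for name in (pipe, table, stage):
        if not _NAME.match(name):
            raise ValueError(f"not a simple identifier: {name!r}")
    file_type = file_type.upper()
    if file_type not in _TYPES:
        raise ValueError(f"unsupported file type: {file_type}")
    # TRUE: cloud storage event notifications trigger loads (external stages only).
    # FALSE: your code calls Snowpipe's REST API to submit new files.
    flag = "TRUE" if auto_ingest else "FALSE"
    return (f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = {flag} AS "
            f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = '{file_type}');")


print(pipe_ddl("orders_pipe", "raw.public.orders", "orders_s3_stage", "json"))
```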

Watch our webinar on how companies can migrate data from SQL Server to Snowflake using Snowpipe.

Snowpipe Streaming:

Snowpipe Streaming differs from Snowpipe by writing rows of data directly into Snowflake tables, with no need to stage files first. It requires a custom Java application, built with the Snowflake Ingest SDK, to pump data and handle errors, whereas Snowpipe has no third-party software requirements.

SnowSQL:

SnowSQL, Snowflake's command-line client, simplifies interaction with Snowflake and makes it easy to migrate data from existing databases.

- First, export data to compatible file formats like CSV.

- Next, upload these files to a cloud storage stage accessible by Snowflake, such as Amazon S3 or Azure Blob Storage.

- Finally, use the COPY INTO command to load data from the stage into Snowflake tables, where it can be analyzed and manipulated with SQL queries.
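The three SnowSQL steps above can be sketched in Python: write rows into gzip-compressed CSV chunks, then generate the `PUT` and `COPY INTO` commands to run in SnowSQL. This sketch assumes an internal named stage (the hypothetical `migration_stage`) that `PUT` can upload to, and POSIX-style local paths. For an external stage on Amazon S3 or Azure Blob Storage, you would upload the files with the cloud provider's tools and run only the `COPY INTO`. The helper names, the table `raw.orders`, and the chunk size are illustrative.

```python
import csv
import gzip
from pathlib import Path
from typing import Iterable, List, Sequence


def export_chunks(header: Sequence[str], rows: Iterable[Sequence[object]],
                  out_dir: Path, prefix: str, rows_per_file: int) -> List[Path]:
    """Step 1: write rows to gzip-compressed CSV files of at most rows_per_file rows."""
    if rows_per_file < 1:
        raise ValueError("rows_per_file must be at least 1")
    out_dir.mkdir(parents=True, exist_ok=True)
    paths: List[Path] = []
    fh = None
    try:
        for i, row in enumerate(rows):
            if i % rows_per_file == 0:  # start a new chunk file
                if fh is not None:
                    fh.close()
                path = out_dir / f"{prefix}_{len(paths):04d}.csv.gz"
                fh = gzip.open(path, "wt", encoding="utf-8", newline="")
                writer = csv.writer(fh, lineterminator="\n")
                writer.writerow(header)  # every chunk gets a header row, skipped on load
                paths.append(path)
            writer.writerow(row)
    finally:
        if fh is not None:
            fh.close()
    return paths


def load_statements(paths: Sequence[Path], stage: str, table: str) -> List[str]:
    """Steps 2 and 3: SnowSQL commands to upload the chunks and load them into a table."""
    stmts = [
        # Files are already gzipped, so PUT's own compression is turned off.
        f"PUT file://{p.resolve().as_posix()} @{stage} AUTO_COMPRESS = FALSE;"
        for p in paths
    ]
    stmts.append(
        f"COPY INTO {table} FROM @{stage} "
        "FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1 "
        "FIELD_OPTIONALLY_ENCLOSED_BY = '\"' COMPRESSION = 'GZIP');"
    )
    return stmts
```

Splitting the export into several moderately sized compressed files, rather than one very large file, lets Snowflake load files in parallel and makes a failed file easier to retry.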

Companies can use these options to migrate their data from on-prem systems to the Snowflake Data Cloud platform.

How Royal Cyber Can Help

Author

Priya George

