Anthos vs. Kubernetes: What’s the Difference?

Written by Priya George

Content Writer

May 24, 2022

Containerization is often the key to a successful migration from on-prem data centers and servers to cloud service platforms such as AWS, Azure, and Google Cloud Platform. A container packages an application's code together with its dependencies, so it offers a lightweight way to move legacy applications. The trouble starts when there are many containerized applications to migrate and manage. Microservices architectures compound the problem, because developers responsible for large systems may have to oversee hundreds of containers. This is where container orchestration becomes essential.

Docker Swarm and Apache Mesos are examples of tools that create clusters to manage containerized applications. However, Kubernetes, originally developed at Google and open-sourced in 2014, has become one of the most popular open-source tools for running containers in clusters.

Understanding Kubernetes’ Role in Container Orchestration

Today Kubernetes, also known as K8s, has become a popular choice for setting up and deploying cloud-native infrastructure. It gives developers a framework for running distributed systems resiliently, handling scaling, failover, and deployment patterns. With Kubernetes, developers can:

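- Automate bin packing: each container declares the CPU and memory it needs, and Kubernetes fits containers onto nodes to make the best use of resources

- Recover from container failures automatically by restarting, replacing, or rescheduling failed containers (self-healing)

- Store and update sensitive information and application configurations without rebuilding container images

- Expose containers through a DNS name or IP address and balance traffic load across them

- Automatically mount the storage system of their choice, such as local storage or a public cloud provider

- Automate container rollouts and rollbacks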

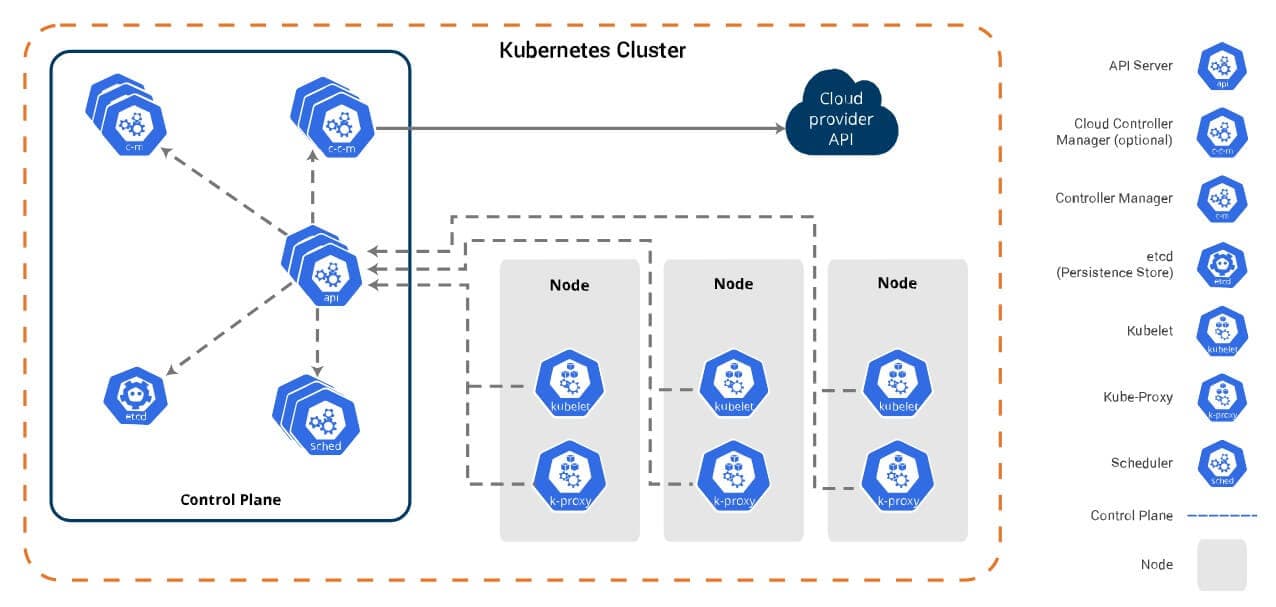
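Bin packing works because each container declares the CPU and memory it requests, and the scheduler places pods only on nodes with enough unclaimed capacity. The Python sketch below illustrates the idea with a first-fit-decreasing heuristic. It is a simplified stand-in, not kube-scheduler's actual algorithm (which filters and scores nodes through configurable plugins), and the `Pod` and `Node` classes, node sizes, and requests are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    cpu_m: int   # CPU request in millicores (hypothetical values)
    mem_mi: int  # memory request in MiB


@dataclass
class Node:
    name: str
    cpu_m: int   # allocatable CPU in millicores
    mem_mi: int  # allocatable memory in MiB
    pods: List[Pod] = field(default_factory=list)

    def fits(self, pod: Pod) -> bool:
        """True if the pod's requests fit in the node's remaining capacity."""
        used_cpu = sum(p.cpu_m for p in self.pods)
        used_mem = sum(p.mem_mi for p in self.pods)
        return (used_cpu + pod.cpu_m <= self.cpu_m
                and used_mem + pod.mem_mi <= self.mem_mi)


def first_fit_decreasing(pods: List[Pod], nodes: List[Node]) -> List[Pod]:
    """Place the largest requests first, each on the first node with room.

    Returns the pods that could not be placed (a real cluster would
    leave them Pending).
    """
    unscheduled = []
    # Largest CPU request first; memory breaks ties.
    for pod in sorted(pods, key=lambda p: (p.cpu_m, p.mem_mi), reverse=True):
        target: Optional[Node] = next((n for n in nodes if n.fits(pod)), None)
        if target is None:
            unscheduled.append(pod)
        else:
            target.pods.append(pod)
    return unscheduled


nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
pods = [Pod("api", 1500, 1024), Pod("worker", 1000, 2048),
        Pod("cache", 500, 3072), Pod("batch", 1500, 512)]
pending = first_fit_decreasing(pods, nodes)
for node in nodes:
    print(node.name, [p.name for p in node.pods])
print("pending", [p.name for p in pending])
```

With these made-up numbers, `api` and `cache` share `node-a`, `batch` lands on `node-b`, and `worker` stays pending because neither node has 1000 millicores of CPU left. Sorting by CPU before memory is an arbitrary choice for the sketch.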

The central concept in Kubernetes is the cluster. When you deploy Kubernetes, you get a cluster: a set of worker machines, called nodes, that run containerized applications, managed by a control plane. The control plane makes decisions about the cluster, such as scheduling pods onto nodes, and responds to cluster events, such as starting up a new pod when one is needed. The following diagram gives an overview of its components:


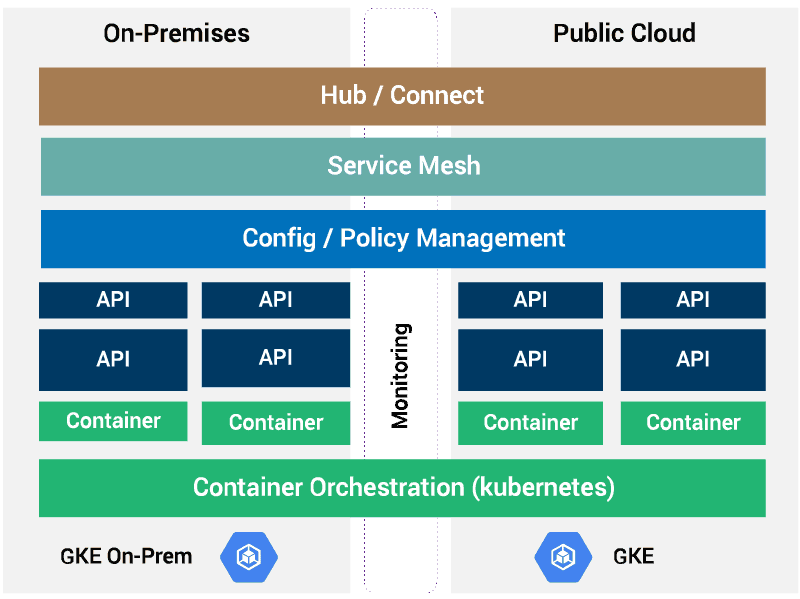
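The control plane's decisions follow a reconciliation pattern: controllers repeatedly compare the desired state (for example, the number of replicas a Deployment asks for) with what is actually running, then act to close the gap. This is also what powers self-healing. The sketch below models a single pass of such a loop in plain Python; it is an illustration under simplifying assumptions, not real controller code, and the `reconcile` function and pod names are hypothetical.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, pods: Dict[str, str]) -> List[str]:
    """One pass of a simplified controller loop (illustration only).

    `pods` maps a pod name to its phase, e.g. "Running" or "Failed",
    and is updated in place. Returns the actions taken, in order.
    """
    actions = []

    # Self-healing: drop failed pods so they get replaced below.
    for name in [n for n, phase in pods.items() if phase == "Failed"]:
        del pods[name]
        actions.append(f"delete {name}")

    # Scale up: create pods until the observed count matches the desired count.
    suffix = 0
    while len(pods) < desired_replicas:
        suffix += 1
        name = f"web-new-{suffix}"  # hypothetical naming scheme
        if name not in pods:
            pods[name] = "Running"
            actions.append(f"create {name}")

    # Scale down: remove surplus pods (here simply the most recently added).
    for name in list(pods)[desired_replicas:]:
        del pods[name]
        actions.append(f"delete {name}")

    return actions


pods = {"web-a": "Running", "web-b": "Failed", "web-c": "Running"}
print(reconcile(3, pods))  # replaces the failed pod
print(reconcile(3, pods))  # already converged, so nothing to do
```

Running the loop a second time changes nothing, which is the property that lets controllers run continuously without piling up duplicate work.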

Container Orchestration with Google Anthos

With Anthos, Google Cloud Platform set out to solve a problem that IT departments faced as Kubernetes clusters and control planes multiplied: the need for a “meta” control plane sitting above the control planes of every department. This control plane had to deploy and manage clusters across environments (on-prem, private and public cloud platforms, and hybrid cloud setups) with the same reliability as GKE (Google Kubernetes Engine), Google Cloud's own managed Kubernetes service. Anthos rests on a few crucial pillars:

- Google Kubernetes Engine: GKE is central to container orchestration on GCP. Anthos clusters extend GKE to Google Cloud Platform, on-prem environments (Anthos on VMware), and other clouds (Anthos on AWS/Azure), so teams can run Kubernetes wherever they intend to deploy their applications. To manage these clusters across environments, Connect lets clusters in all of these domains be monitored and managed from a single dashboard.

- Anthos Service Mesh: Built on Istio, Anthos Service Mesh provides a control plane that helps monitor services, manage traffic between microservices, and apply network and routing policies across services.

- Anthos Config Management: With Anthos Config Management, developers can enforce uniform policies, configurations, and deployments across all Kubernetes clusters, whether managed or unmanaged. A Git repository acts as the single source of truth, and clusters are checked against it to ensure their configuration does not drift.

- Cloud Run for Anthos: Knative lets developers run serverless containers on Kubernetes. Cloud Run for Anthos adds a managed layer on top, bringing Knative's capabilities to hybrid and multi-cloud environments.
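The Anthos Config Management pillar rests on a simple comparison: the configuration committed to Git is the desired state, and each cluster's live configuration is checked against it. The sketch below shows only that drift-detection step, using in-memory dictionaries in place of a repository and real clusters. It does not use Config Sync's APIs; the `find_drift` and `audit_fleet` functions, cluster names, and settings are hypothetical. In practice, Config Sync also reconciles drifting resources back to the state stored in Git rather than only reporting them.

```python
from typing import Dict, Optional, Tuple

# Flattened config: setting key -> value (a hypothetical representation).
Config = Dict[str, str]
Drift = Dict[str, Tuple[Optional[str], Optional[str]]]


def find_drift(desired: Config, live: Config) -> Drift:
    """Return {key: (desired_value, live_value)} for every key that differs.

    A key missing on one side is reported with None for that side.
    """
    drift: Drift = {}
    for key in desired.keys() | live.keys():
        want, have = desired.get(key), live.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


def audit_fleet(desired: Config, clusters: Dict[str, Config]) -> Dict[str, Drift]:
    """Check every cluster against the same desired config.

    Only clusters that have drifted appear in the report.
    """
    report = {}
    for name, live in clusters.items():
        drift = find_drift(desired, live)
        if drift:
            report[name] = drift
    return report


# Stand-in for the configuration stored in the Git repository.
desired = {"policy/require-labels": "enabled",
           "namespace/payments/quota.cpu": "4"}

# Stand-ins for what three clusters in different environments report.
clusters = {
    "gke-us-central": dict(desired),
    "onprem-vmware": {"policy/require-labels": "disabled",
                      "namespace/payments/quota.cpu": "4"},
    "aws-east": {"policy/require-labels": "enabled"},
}

for cluster, drift in audit_fleet(desired, clusters).items():
    print(cluster, drift)
```

Because every cluster is compared against the same source, a single report covers clusters on Google Cloud, on-prem, and in other clouds alike.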

Google Cloud Anthos is well suited to organizations that want to migrate applications from on-prem environments or between cloud service platforms. Download our whitepapers for directions on moving legacy applications to GKE with Migrate for Anthos and deploying applications from AWS to GCP.

Google Anthos vs. Kubernetes: Understanding How They Differ

The key difference is that Google Cloud Anthos sits a level above individual Kubernetes clusters: it addresses the problem of managing many clusters at once. Whether you need Anthos or plain K8s largely depends on how many clusters you need to run your services smoothly.

Kubernetes is a cloud-agnostic, widely adopted leader in container orchestration. It offers autoscaling and self-healing, is quick to set up, and is cost-effective. It has drawbacks, too: it focuses solely on managing infrastructure (a Container-as-a-Service tool), so it requires more in-house expertise to operate, and setting up on-prem clusters on your own can be difficult because of high resource requirements. Anthos, by contrast, gives companies a service that enforces uniform policies and upgrades across multiple clusters, provides managed Kubernetes anywhere, and includes direct access to Google SREs for troubleshooting and remediation. Because it is a managed service, however, many companies may find it too expensive.
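The autoscaling mentioned above usually means the HorizontalPodAutoscaler, which the Kubernetes documentation describes with the rule desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function below is a minimal sketch of that rule, including the default 10% tolerance within which no scaling happens and clamping to minimum and maximum replica counts. The real controller also accounts for missing metrics, pod readiness, and scale-down stabilization, which this sketch omits; the parameter defaults here are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,    # illustrative bound
                     max_replicas: int = 10,   # illustrative bound
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA rule: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


print(desired_replicas(3, 200, 100))  # → 6 (metric at twice the target doubles replicas)
print(desired_replicas(4, 63, 60))    # → 4 (within the 10% tolerance)
print(desired_replicas(8, 300, 60))   # → 10 (capped at max_replicas)
```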

Therefore, the decision to adopt Google Cloud Anthos depends on how many clusters you run and where they are located. Anthos makes the most sense for organizations that run many clusters across hybrid and multi-cloud environments and are struggling to manage those clusters and their control planes. It can also help companies that must coordinate many microservices, such as those behind an e-commerce site. In contrast, Kubernetes alone can be a simple solution for a small company that wants to run efficient container applications on a single cloud service platform.

How Royal Cyber Can Help Out

In conclusion, while there are operational differences between Anthos and Kubernetes, Anthos is better understood as the next step in managing Kubernetes clusters. Both platforms play a vital role in building cloud-native infrastructure with minimal disruption. The choice between them comes down to how much management your business needs on top of container orchestration. Whichever platform you choose, Royal Cyber experts have you covered.

Get in touch with our Google Cloud Platform experts for guidance on building robust cloud-native platforms for your organization, and work with them to determine which platform best fits your business needs.

For more information, contact us at [email protected].
