How Can Apache Airflow Help Data Engineers?

Written by Hafsa Mustafa

Content Writer

August 24, 2022

Data engineers are responsible for creating and maintaining data frameworks that provide critical data for data scientists and analysts to work with. Platforms like Apache Airflow make data orchestration and transformation easier for data engineers by automating the authoring, scheduling, monitoring, and deployment of data pipelines.

Before the arrival of Airflow, pipelines for loading, extracting, or transforming data were typically run with simple scripts and conventional schedulers. As data pipelines became more complicated over time, these schedulers struggled to keep up. They could not trace changes in the workflows, nor could they handle dependent jobs well. Since log outputs were not kept in a centralized place, it was also hard to review them for transparency purposes. Another major issue with conventional schedulers was task status tracking. It was difficult, for example, to find out which task was taking longer than usual to complete.

This blog will shed light on the architecture and workings of Apache Airflow to highlight how you, as a data engineer, can benefit from using Airflow as your data pipeline management system.

What is Apache Airflow?

Apache Airflow is open-source software that simplifies workflow management for data engineers. It can be described as a data orchestration tool that lets data engineers define, manage, and monitor workflows in one place. Airflow was created at Airbnb in 2014, entered the Apache Incubator in 2016, and became a top-level Apache Software Foundation project in 2019.

Airflow automates the scheduling and running of data pipelines. Workflows are written as Python code and represented as Directed Acyclic Graphs (DAGs). A DAG is a collection of tasks arranged so that the relationships between them are explicit. "Directed" means every dependency points one way, from an upstream task to a downstream one, and "acyclic" means the graph never loops back on itself. The nodes in a DAG represent the tasks, and the edges represent the dependencies. Let's go through the architecture of Airflow to get a better understanding of how it functions and how it can simplify data engineering.
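To make the idea of nodes and edges concrete, here is a minimal sketch that uses only Python's standard library rather than Airflow itself. The task names (`extract`, `transform`, `load`, `report`) are hypothetical. It shows how a dependency graph yields a valid run order, and why a loop is not allowed.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key is a task (a node), and each value is
# the set of tasks it depends on (the edges pointing into that node).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

# A valid execution order places every task after its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)

# Making "extract" depend on "report" closes a loop. The graph is no
# longer acyclic, so no valid order exists and CycleError is raised.
cyclic = dict(dependencies, extract={"report"})
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)
```

An orchestrator needs this acyclic property so it can always decide which task is safe to run next.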

Apache Airflow Architecture

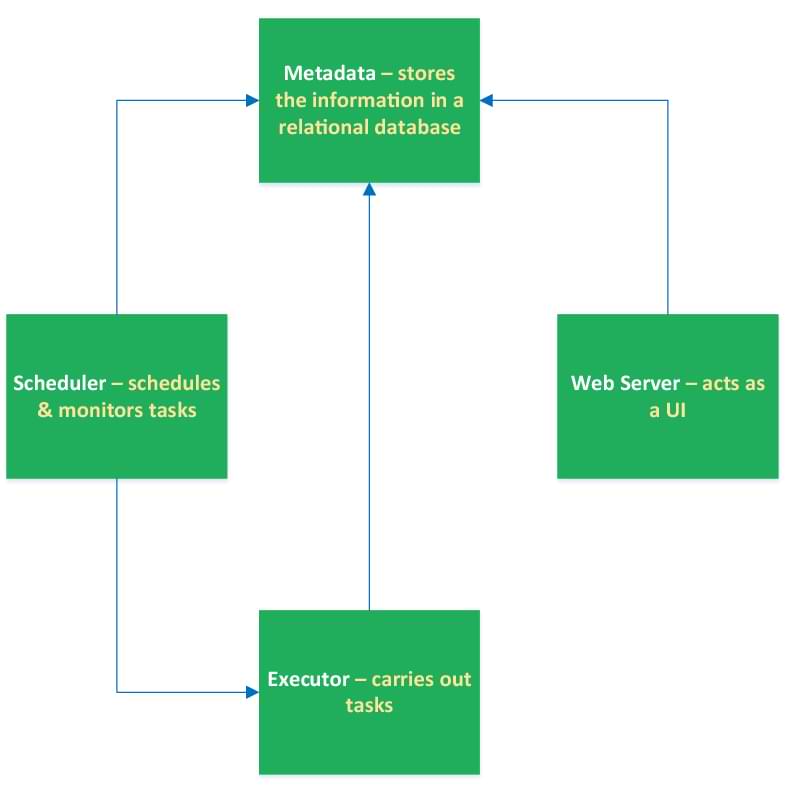

The core components of Apache Airflow can be summarized in the following points:

- Metadata database

- Scheduler

- Web Server

- Executor

The metadata database forms the core and the most critical part of the Airflow architecture. As its name suggests, it stores all the crucial information about DAG runs and the record of previous tasks. It is a relational database that keeps information about the environment's configuration, the status of running tasks, and task instances.
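As a rough illustration of why a relational store is useful here, the sketch below keeps task run records in an in-memory SQLite database and queries them. The `task_runs` table, its columns, and the sample rows are made up for this example and are not Airflow's actual schema.

```python
import sqlite3

# In-memory database standing in for the metadata store.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE task_runs (
        dag_id TEXT NOT NULL,
        task_id TEXT NOT NULL,
        run_date TEXT NOT NULL,
        state TEXT NOT NULL,
        duration_seconds REAL
    )
    """
)

# Hypothetical history for a pipeline called "daily_sales".
rows = [
    ("daily_sales", "extract", "2022-08-22", "success", 40.0),
    ("daily_sales", "extract", "2022-08-23", "success", 42.0),
    ("daily_sales", "extract", "2022-08-24", "success", 300.0),
    ("daily_sales", "load", "2022-08-23", "success", 15.0),
    ("daily_sales", "load", "2022-08-24", "failed", 3.0),
]
conn.executemany("INSERT INTO task_runs VALUES (?, ?, ?, ?, ?)", rows)


def failed_tasks(connection):
    """Return (task_id, run_date) for every failed run, oldest first."""
    query = (
        "SELECT task_id, run_date FROM task_runs "
        "WHERE state = 'failed' ORDER BY run_date"
    )
    return connection.execute(query).fetchall()


def slow_runs(connection, factor=2.0):
    """Return runs that took more than `factor` times the task's average."""
    query = """
        SELECT t.task_id, t.run_date, t.duration_seconds
        FROM task_runs AS t
        JOIN (
            SELECT task_id, AVG(duration_seconds) AS avg_duration
            FROM task_runs
            GROUP BY task_id
        ) AS a ON a.task_id = t.task_id
        WHERE t.duration_seconds > ? * a.avg_duration
        ORDER BY t.run_date
    """
    return connection.execute(query, (factor,)).fetchall()


print(failed_tasks(conn))
print(slow_runs(conn))
```

With the run history in one queryable place, questions such as "which task failed?" or "which task is taking longer than usual?" become simple lookups.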

The Airflow Scheduler acts like an executive, as it is responsible for monitoring tasks and triggering their instances. It must be started as its own process (for example, with the `airflow scheduler` command) before it can trigger anything. Once it is running, the Scheduler automatically starts a scheduled run when the time period that run covers has ended. It also decides the priority in which tasks are executed.
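The two scheduling decisions described above can be sketched in a few lines of plain Python. This is a simplified illustration, not Airflow's scheduler code: the function names and the `(name, priority)` tuples are assumptions made for the example.

```python
from datetime import datetime, timedelta


def is_due(interval_start, interval, now):
    """A run covering [interval_start, interval_start + interval) is due
    only once that interval has fully ended."""
    return now >= interval_start + interval


def order_by_priority(tasks):
    """Sort (name, priority) pairs so that higher priority comes first.
    Ties are broken alphabetically by task name."""
    return sorted(tasks, key=lambda task: (-task[1], task[0]))


start = datetime(2022, 8, 24)
daily = timedelta(days=1)

# At noon on August 24 the daily interval is still open, so nothing runs.
print(is_due(start, daily, datetime(2022, 8, 24, 12, 0)))

# At midnight on August 25 the interval has ended, so the run is due.
print(is_due(start, daily, datetime(2022, 8, 25, 0, 0)))

# Among tasks that are ready, the higher-priority ones are picked first.
print(order_by_priority([("report", 1), ("load", 5), ("extract", 5)]))
```

Note that a daily run for August 24 starts after August 24 is over, because only then is that day's data complete.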

The Web Server hosts the front end of Airflow. It is the interface a user interacts with while using the management system, and it reads from the metadata database through database connectors. The Web Server is a Flask application that helps users visualize the status and components of DAGs and assess the overall health of a task.

The final component is the Executor. It carries out the tasks that have been triggered by the Scheduler, and it determines which workers actually get the job done. The Sequential Executor, which runs one task at a time, is the default executor in Airflow and is fully compatible with SQLite. However, you can also use other executors, such as Celery and Kubernetes.
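The difference between running tasks one at a time and handing them to a pool of workers can be sketched with Python's standard library. This is only an analogy: `run_task` and the task names are hypothetical, the pool here is made of threads rather than Celery or Kubernetes workers, and the tasks are assumed to be independent of each other.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task(name):
    """Stand-in for real work; returns a status string for the task."""
    return f"{name}: done"


def run_sequentially(task_names):
    """Run tasks one after another, as a sequential executor would."""
    return [run_task(name) for name in task_names]


def run_with_workers(task_names, max_workers=3):
    """Hand tasks to a pool of workers that can run them concurrently.
    pool.map returns results in the same order as the input names."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_task, task_names))


tasks = ["extract_orders", "extract_customers", "extract_products"]
print(run_sequentially(tasks))
print(run_with_workers(tasks))
```

Both functions produce the same results. What changes is how many tasks can be in progress at once, which is the main reason to move beyond a sequential executor as workloads grow.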

Variations in Architecture

The Airflow architecture comes in two types:

- Single Node

- Multi-node

If you are running a moderate number of DAGs, the single-node architecture should work fine. In that case, all the components of the system run on that single node. However, if you are handling big data, you may need the multi-node architecture. In such an architecture, the Web Server and the Scheduler reside on the same node, but the workers are located on different nodes. The most suitable executor for this architecture is Celery.

Apache Airflow & Data Engineering — a Quick Overview

Here’s how Apache Airflow is benefitting the data engineering sector:

- Airflow keeps an audit trail for all the performed tasks

- It takes the cumbersome manual work out of data orchestration

- It is highly scalable — you can include as many workers as you like

- You can easily manage and monitor workflows

- It is easy to use, since workflows are written in standard Python

- It is a free and open-source management system

- You can set up alerts for your pipelines to stay informed

- Readily integrates with Talend if needed to run jobs

- Airflow’s analytical dashboard lets you get the bigger picture of how things are turning out

Conclusion

In this blog, we discussed the basic structure of Apache Airflow and how it can be used to improve the way data pipelines run. If you have further questions about this workflow management software, you can contact the Royal Cyber data engineering expert team to get your queries answered.
